Though it sounds counter-intuitive, trying to customize flexibility in research spaces may actually inhibit the intended outcome in the long term, according to Niraj Dangoria, associate dean of facilities planning and management at Stanford School of Medicine, and David Bendet, associate principal at Perkins+Will Architects. Designers should focus instead on the people and modularity, even when future research needs are uncertain and can change rapidly.

Facility planners can do the right project and do the project right from the outset by not only providing a flexible framework and analyzing the needs of probable end users, but also creating a master plan, developing strategies to increase space utilization and efficiency, and making decisions early.

“Some elements of facilities offer the least flexibility—the foundations, the skin, and the like—but people are the most flexible factors, because they’re in and out and changing all the time,” says Dangoria. “As facilities developers, designers, and operators, we have to focus on understanding how we influence this flexibility.”

“The real question may be, what is the type of space that we’re planning to provide in order to support research needs in the future, and can we anticipate it?” adds Bendet.

Computational Space Needs are Increasing

Flexibility means different things to different user groups. Over the last decade, the need for dry labs, computational labs, and non-traditional research support spaces has continued to increase, notes Bendet. This raises the need for buildings whose digital technology integration can evolve and for planning that can anticipate future space needs.

The brain research by two Stanford faculty members illustrates one extreme of how research needs can quickly balloon beyond what anyone anticipated. Their research, which involves observing millions of active neurons in live animals, began five years ago in a small, off-campus lab with three or four benches and a couple of rigs—customized, 6-foot-tall Unistrut® framed cabinets on air tables that hold equipment for experiments, as well as computers and recording devices, requiring 360-degree access.

These researchers partnered with other researchers and developed new techniques that quickly ramped up their research—from studying a small number of animals at a time to larger colonies.

Collecting more information meant they also needed to store and process it; their data storage and processing needs leapt from one gigabyte at each station to 10 gigabytes.

“They had been doing wet bench research, and now all of a sudden they’re into this new dimension where they are generating tons and tons of data, of the kind that had never been seen before,” says Dangoria.

Although Stanford was planning a new data center, it was more than two miles from the researchers’ lab and would not meet their needs. Stanford was able to accommodate the researchers within its existing infrastructure, although it required greatly increasing energy capacity, giving them more space and three additional rigs, and providing two graduate students. The researchers’ team now includes up to 50 people using 10 gigabytes of data onsite, and they have invented a whole new science called optogenetics.

“These are just two researchers at Stanford, and we have to deal with the entire spectrum. But their research is so groundbreaking, is doing so many amazing things, that it is impossible to predict what the future is likely to be,” says Dangoria.

“How does a facilities professional even dream of supporting people like this? That is really at the heart of the challenge that we face,” he says.

Doing the Right Project

Stanford has built a number of facilities over the last 10 years, and the planning has continuously evolved during that time.

“We were going to build one building as a prototype, and the next six buildings were going to be exact replicas. Obviously, that doesn’t work in today’s world,” says Dangoria. “As we started looking at this issue, we have come to realize that the answer doesn’t lie in the space, although it may eventually. The answer has to begin with the faculty.”

The Clark Center, a Stanford facility built in 2001 with a highly open concept, was one of the most celebrated lab buildings of its time. In 2010, Stanford opened the Lokey Stem Cell Center. The two were very different projects, each with positive and negative outcomes.

The Clark Center is a glass-walled, three-story building with a keyhole-shaped courtyard in the middle. The intended function of the building is to integrate biology, medicine, and engineering—interdisciplinary biomolecular science. It was constructed so that all the scientific equipment and offices could be easily moved and rearranged. But the equipment is so customized that when a new faculty member wanted to rearrange, it cost three times as much as in a typical research building, says Dangoria.

“In this scenario, when you detour from the designed flexibility, and a fundamental part of the framework changes, the designed flexibility can become a constraint,” he says.

The Lokey Building, by contrast, is a simple building of square labs. Flexibility was created by using lab equipment of a generic design that can be made by different manufacturers. Gases are connected in the ceiling, so change-outs can be done more easily and cheaply. Good design meant planning the building around a set number of square feet per lab bench. The people in the building generate enough indirect costs to support the space that they are occupying.

“On the Clark Center, the flexibility created was customized specifically for bio-engineering research,” says Dangoria. “Accommodating different research modalities has resulted in a redesign of spaces, essentially tearing out the designed flexibility, which has proven to be very expensive. We redefined flexibility in Lokey with a focus on ‘modularity and generic.’ Lokey isn’t as glamorous as the Clark Center, but it has been a much more economical model of flexibility.

“It’s not that one is right and one wrong; each building responded to issues at the time. But over-customization of something as simple as flexibility may not be a good thing,” he adds.

Designing Around the End Users Instead

Planning teams typically have divided faculty members into three types: wet bench, dry bench, or clinical, but over the past year, Stanford has been engaged in a planning effort that has developed five basic faculty phenotypes with differing design needs:

- Lab scientist

- Computational faculty member

- Faculty focused on informatics and clinical outcomes

- Clinical trialists

- Clinicians

Even though faculty at the School of Medicine at Stanford are basically divided into four lines—university tenured, medical center tenured, non-tenure clinical educators (practitioners), and non-tenured research faculty—each of these groups can be divided among the five phenotypes, says Dangoria.

“And many of them end up doing work between one group and the other. No one person works across all five groups, but they may work across two or three.”

Traditionally, lab scientists have each received a bench and a desk, he notes, but this may need rethinking. To make research spaces more effective and to save money, Stanford is looking to give everybody in a lab group a desk but have them share benches. The proposal is eight benches shared among a group of 10 (the average lab size), so the space needed per lab scientist is about 1,400 sf.

“The computational faculty is a group that is different but growing. We’re finding more and more that even our most traditional research scientists are starting to do computational work, and not everybody needs benches,” says Dangoria.

The informatics and clinical outcomes group is a smaller group, consisting of post-doctoral and doctoral students who strictly work with large amounts of data. They do only dry bench work on computers so they need much less space.

Researchers in the computational faculty group split their efforts between data-driven work (dry bench) and wet bench work, so they get four benches and 10 stations.

The clinical trialist group consists of nurses and research coordinators usually working in conjunction with developing new products, such as pharmaceuticals, devices, or therapies. They need spaces for blood draws, lab support, and exam rooms. Their work is dry, but a different type of dry than the computational phenotype, adds Dangoria.

The practicing clinician phenotype works in a clinic for some portion of the day seeing patients; they need only an office space.

“We’ve taken our faculty and put them into these five phenotypes so we can better understand how much space we’re going to need in the future,” explains Dangoria.

In order to figure out the average space needs for each phenotype, Stanford created a metric that took into account average group size, group breakdown, and the need for benches, lab support, workstations, and office space. The space-per-phenotype results were:

- Laboratory scientist – 1,382 sf

- Computational – 1,094 sf

- Informatics/clinical outcomes – 478 sf

- Clinical trialist – 560 sf

- Clinician – 111 sf

To test its metric, Stanford analyzed one of its faculty members who devotes 45 percent of her time to traditional wet bench science, 35 percent to computational work, and 20 percent to informatics, and concluded that she would need about 1,100 sf.

“If we had simply called her a computational faculty member, because that is exactly what she is, she needs about 1,100 sf. And this was just one way for us to start ensuring that our hypotheses were working.”
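The test described above can be reproduced as a time-weighted average of the per-phenotype figures. The sketch below is only an illustration: the article does not say how Stanford actually performed the calculation, so the weighted-average approach is an assumption, and the names `SPACE_PER_PHENOTYPE_SF` and `blended_space_sf` are hypothetical. The square-footage values and time shares come from the article.

```python
# Space per phenotype in square feet, as reported in the article.
SPACE_PER_PHENOTYPE_SF = {
    "lab_scientist": 1382,
    "computational": 1094,
    "informatics_clinical_outcomes": 478,
    "clinical_trialist": 560,
    "clinician": 111,
}


def blended_space_sf(time_shares):
    """Return a time-weighted average of phenotype space needs.

    Assumption (not stated in the article): a faculty member's space
    need is the sum of each phenotype's space multiplied by the share
    of time spent in that phenotype.
    """
    total_share = sum(time_shares.values())
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("time shares must sum to 1.0")
    return sum(
        SPACE_PER_PHENOTYPE_SF[phenotype] * share
        for phenotype, share in time_shares.items()
    )


# The faculty member from the article: 45% wet bench science,
# 35% computational, 20% informatics.
example_shares = {
    "lab_scientist": 0.45,
    "computational": 0.35,
    "informatics_clinical_outcomes": 0.20,
}

estimate = blended_space_sf(example_shares)
print(f"Estimated space: {estimate:.0f} sf")
```

Under this assumption the blended figure lands close to both the roughly 1,100 sf reported for this faculty member and the 1,094 sf assigned to the computational phenotype.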

Another factor to consider, he says, is that as more lab benches are shared, and space is tightened for each faculty member, the natural response will be sharing of resources and more interdisciplinary collaboration.

“It doesn’t make sense for each to have his/her own labs when they can share resources. When we try to squeeze labs and make space tighter, faculty will be more likely to look for commonalities.”

Also, more and more, faculty expect new facilities to provide “well-being” or lifestyle spaces—daycare, health clinics, fitness centers, and cafeterias—which provide users and building occupants additional convenience and encourage them to spend more time on campus.

The scientist of the future will also be more of a “mobile scientist,” not sitting at a research desk all day with test tubes and beakers in hand, says Bendet. Such scientists will rely heavily on digital information and monitoring technology, along with new styles of communication and new types of spaces, such as collaboration and meeting spaces within the labs and breakout spaces with immersive digital technologies. The assumption is that every space, inside and outside, will support diverse working styles and access from mobile electronic devices such as iPads and iPhones.

“This ‘mobile scientist’ is really a research-anywhere concept that relies on technology,” he says. “We’re looking at different styles of workspaces (more dense environments with potentially less personal space) where more shared amenities increase space utilization. One aspect of increased utilization is higher employee density, which generates higher activity levels, collaboration, and increased creative energy—like a beehive of activity.”

Doing the Project Right

Delivering the best facility design also involves doing the project right from the outset, says Bendet. “The early stages of visioning and early design are really where we have the opportunity to bring the highest value to projects.”

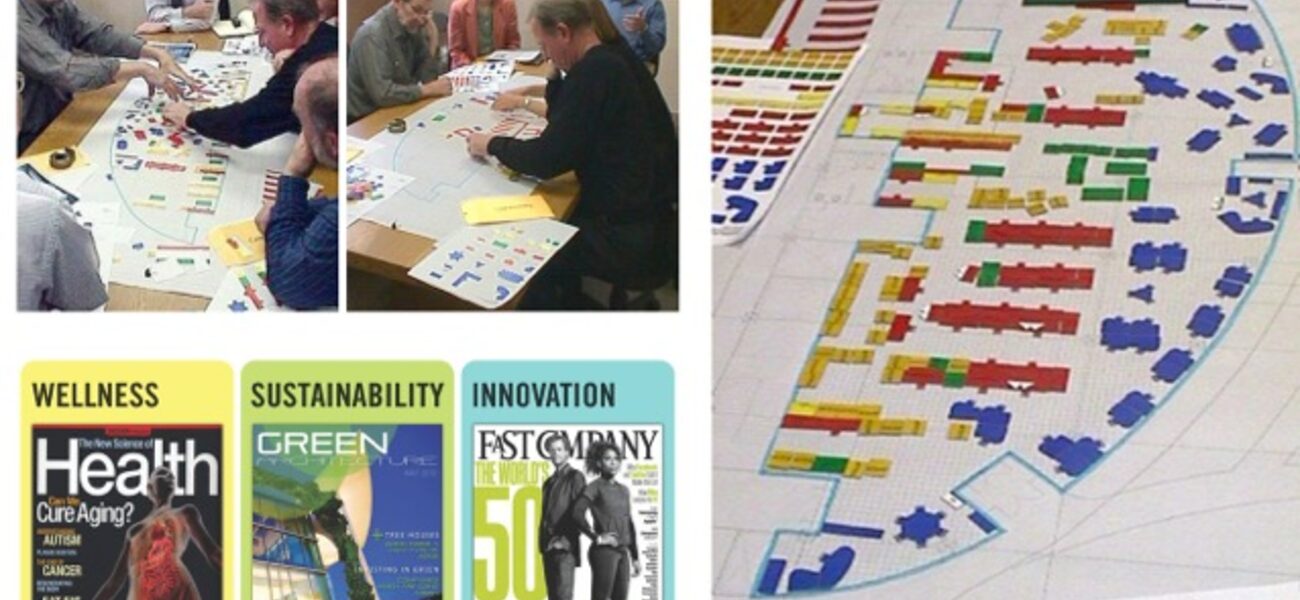

Establishing the right vision and goal for each facility can involve any number of visioning processes that bring together the stakeholders. One of the tools Perkins+Will uses is gaming exercises. There are different types of gaming exercises, all of them fun ways to engage stakeholders in visioning, programming, and planning for a facility.

The “headlines” exercise asks stakeholders: “If you were to have your facility published in a magazine a few months after it opened, what magazine would run the article and what would be the title?” This game is useful to establish the client vision and goals.

The “service blueprint” exercise asks participants to imagine, step by step, how they would access and use the space on a daily basis, starting before they even enter the facility. This game helps in programming the space requirements and flows of people, materials, and equipment.

Another exercise involves all users in placing “game pieces” representing rooms, casework, and/or equipment in different scenarios on the design plans. This collaborative game engages the users in discussions regarding space needs, as well as opportunities for sharing space and equipment among multiple users.

“This is when the stakeholders and users are able to voice their needs and wants, and look for sharing, collaboration, and interdisciplinary opportunities,” explains Bendet. “If you’re able to actively engage your key stakeholders and users in the design and programming process for a facility, you have the best opportunity to start the conversation about how the facility can support the user groups in working together now and in the future.”

At Perkins+Will, the design process is changing in much the same way research is changing, with a lot more computational analysis assisting the design and decision-making, adds Bendet. Computational design analytics drives innovation as planners are able to model novel design solutions, test their effectiveness, and demonstrate the added value to clients.

“We’re now using computational design analytics to develop efficient space programs, evaluate effectiveness of alternative mechanical/ventilation systems, and test sustainable design and energy efficiency strategies. It also allows more design variables to be applied more quickly. Computational design tools are getting sophisticated enough to model numerous design scenarios, and with the data provided reduce the decision-making risk to our clients.”

Lessons to Keep in Mind

With efforts to reduce space and save money packing people more densely together, it’s important to keep in mind the need for private workspace, says Bendet. But private space can be found in any type of environment, including conference rooms and even the cafeteria.

There still isn’t a foolproof way to assess space utilization, notes Dangoria, and utilization involves more than human presence in the labs and workspaces. Stanford has routinely assessed utilization by checking how often the labs are occupied, and the results differ by day and time.

“It always comes back as about 35 percent of the building is occupied at any given time. But the users tell us that their benches are active and engaged in science, even though they are not present.”

In conclusion, Dangoria and Bendet say it’s important to:

- Master plan for the future: Don’t try to predict. Provide a flexible framework.

- Increase space utilization and efficiency: This is not optional. Set targets and benchmark other facilities.

- Make decisions early: Define and communicate vision and goals. Promote innovation for best value.

“It’s about giving faculty what they need without being completely driven by their wants,” says Dangoria. “To provide the best tools for faculty to be the most successful researchers, make decisions early, but be sure to communicate your vision, and be sure to promote innovation, because that’s at the heart of the issue.”

By Taitia Shelow

This report is based on a presentation Dangoria and Bendet gave at Tradeline’s 2014 International Conference on Research Facilities.

| Organization |
|---|
| Perkins&Will |
| Unistrut |
| Stanford University |