Generative AI technologies like ChatGPT and DALL-E are expected to dramatically impact the design of science and research facilities by expediting creative ideation, improving process efficiency, and driving scientific innovation. Among other things, AI’s predictive capabilities will be able to help architects and engineers anticipate the rapidly evolving needs of modern science to create flexible, efficient spaces that support new research methods and equipment. Despite the many potential benefits of generative AI, there are concerns in the industry about the risks of adopting these powerful new tools in the design process of high-functioning facilities. There also are questions about how they will change the workflow for professionals in different sectors.

Many common misconceptions arise from the fact that the term “Artificial Intelligence (AI)” is frequently used as a media buzzword in reference to a broad range of different technologies—some of which are AI-based and some of which are not. A key thing to understand is that there are significant differences between traditional algorithm-based AI systems and new generative AI technologies like ChatGPT.

Traditional AI vs. Generative AI

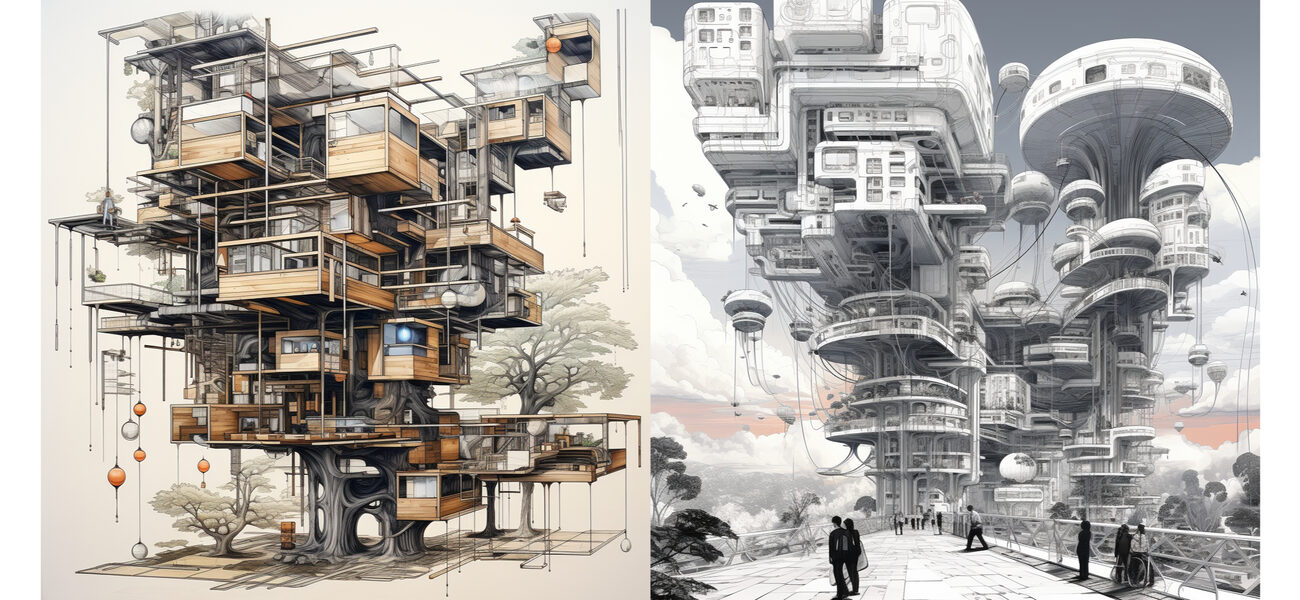

Traditional AI technologies have been around for decades and currently serve a wide range of functions in multiple industries including language processing (chatbots), pattern recognition, fraud detection, and predictive problem solving. But newly emerging “generative AI” models are different from their predecessors in that they are trained to “learn” from massive datasets and ongoing user inputs so they can produce new content in the form of text, images, audio, video, and computing code. Examples of generative AI programs include ChatGPT, Bard, and DALL-E, an OpenAI application that can generate images in response to text prompts.

These generative AI interfaces are compelling for designers because they allow users to generate high-quality text, images, and video content in a matter of seconds and refine the output through ongoing conversational prompts. As a result, there’s been a lot of buzz in the industry around the many ways generative AI can enhance design speed and operating efficiency of costly research facilities. There’s also a lot of confusion about what generative AI actually is and what it’s for. Ask a dozen architects and engineers how they’re using AI in their practice and you’ll get a dozen different answers.

So, in an effort to clear up some of the common misconceptions and learn how facility designers are actually using AI in the real world, Tradeline interviewed a cross-section of industry-leading professionals who specialize in science and research facilities to find out if—and how—they’re using AI technologies and what they see as some of the potential risks and benefits.

Opportunities and Benefits

There are numerous ways generative AI can be applied to improve the design and operation of high-cost research facilities. One of the most common applications among the design professionals we spoke with was the rapid generation of initial design options based on key variables like research type, building size, adjacencies, and equipment—which can be highly valuable for expediting the design and construction of complex, multidisciplinary labs.

“When it comes to using AI tools for design, I think there’s tangible value in being able to do something faster, because our clients pay us for our time and expertise,” says Kelley Cramm, senior associate at Henderson Engineers. “So if we can do things faster and more efficiently without compromising quality, it translates to lower costs for our clients.”

“One thing that I think makes AI highly effective in this space is its ability to analyze massive amounts of data much, much faster than humans can,” says Brian Haines, chief strategy officer at FM:Systems. “But its success relies on having massive amounts of information available to the algorithms. If you ask one of these generative AI models to create a high-performing biodiversity lab along the coastline in Florida, but it doesn’t have access to large amounts of information to run its algorithms on, it’s not going to give you anything useful. It needs to be given a massive amount of data that it can iterate millions and millions of times over.

“Another important aspect of AI is that it needs to have a desired outcome,” says Haines. “To give you an example, I recently saw a video of a four-legged robot at UC Berkeley, where the research team gave the robot only one task: Learn to walk. Of course, as a robot, it has no idea what walking even means. But they gave it a desired outcome and then essentially used the robot to fail probably hundreds of thousands of times in one hour to develop a massive dataset of learnings. By the end of that hour, it was walking perfectly. It was successful because A) it was given that desired outcome, and B) it was able to build a gigantic dataset quickly so it could learn from its failings until it got it right. I think this same model applies to AI and buildings. Going back to my example, if I ask an AI model to create an advanced biodiversity lab near the coastline in Florida, it’s not going to be successful unless it can access large amounts of data. But if it has access to things like the past 50 years of weather data and the design features of similar labs in similar climates, it can try different iterations a billion times over to learn from its failings and then generate something rather incredible at the end of the process.”
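For readers who want to see the trial-and-error idea in miniature, the following is a toy sketch only, not the method used by the Berkeley team or by any commercial design tool. It uses simple random-search hill climbing: a made-up "desired outcome" (an invented ideal split of floor area between lab, office, and support space) is scored, thousands of slightly altered candidates are tried, and only the improvements are kept. The target values, function names, and step size are all assumptions chosen for illustration.

```python
import random

# Hypothetical "desired outcome": an invented ideal split of floor area
# between lab, office, and support space. These numbers are made up.
TARGET = (0.6, 0.3, 0.1)


def score(design):
    """Return a score where higher is better and 0 is a perfect match."""
    return -sum((d - t) ** 2 for d, t in zip(design, TARGET))


def learn_by_trial(trials=20000, step=0.05, seed=0):
    """Random-search hill climbing: try many variations, keep improvements."""
    rng = random.Random(seed)
    best = (1 / 3, 1 / 3, 1 / 3)  # start from an uninformed equal split
    best_score = score(best)
    for _ in range(trials):
        # Nudge each share a little, never letting a share go negative.
        candidate = [max(0.0, b + rng.uniform(-step, step)) for b in best]
        total = sum(candidate)
        if total == 0:
            continue  # degenerate candidate, skip it
        # Renormalize so the three shares still add up to 100% of the area.
        candidate = tuple(c / total for c in candidate)
        candidate_score = score(candidate)
        if candidate_score > best_score:
            # Failed attempts are simply discarded; successes become the
            # new starting point for the next round of attempts.
            best, best_score = candidate, candidate_score
    return best, best_score


best_design, best_design_score = learn_by_trial()
```

Most of the 20,000 attempts are "failures" that get thrown away, which mirrors the point in the quote: without a clearly defined outcome to score against, there would be nothing to tell a good attempt from a bad one.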

Machine learning algorithms also hold promise for optimizing a building’s ongoing operations based on energy use, occupancy levels, and weather conditions to reduce costs and improve sustainability. Operations data gathered over time from sensors and BMS software could also help predict future facility needs based on emerging trends in the client’s field of research.
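As a rough illustration of how operations data might feed a prediction, here is a minimal sketch that fits a straight line to invented sensor readings and uses it to estimate energy use at a higher occupancy level. It assumes a simple least-squares fit with a single input; real building analytics platforms would draw on many more variables (weather, schedules, equipment loads) and more sophisticated models. The readings and function names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up hourly readings: occupants counted by sensors, energy in kWh.
occupancy = [10, 20, 30, 40, 50]
energy_kwh = [120, 140, 160, 180, 200]

slope, intercept = fit_line(occupancy, energy_kwh)


def predict_energy(occupants):
    """Estimate hourly energy use (kWh) for a given number of occupants."""
    return intercept + slope * occupants


estimate_for_60 = predict_energy(60)
```

With these invented numbers the fit works out to a base load of 100 kWh plus 2 kWh per occupant, so the estimate for 60 occupants is 220 kWh.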

“There’s a massive gap in our industry where once a building is designed, built, and commissioned into operation, no one goes back to check on how it’s operating,” says Haines. “Architecture and engineering firms deliver these buildings and move on to the next project, because they’re in a service industry that works on an hourly basis. So it’s not conducive for them to go ask clients they had 10 years ago if they can come back into their building to see how it’s operating and if it was successful. But, for example, our software alone has more than 3 billion sf of real estate in it. And, since 2015, we’ve been adding occupancy sensors, algorithms, and analytics tools that are allowing us to look at the data gathered from massive real estate portfolios belonging to some of the largest organizations in the world. Now we can apply all of those learnings to better understand what kind of equipment is being used, how much space is required, and what their energy use is so we can produce models that tell us how those facilities are operating. The ultimate goal of which is to create smart buildings that can learn to self-optimize while also feeding data forward to inform the design process of future buildings.”

Additionally, generative AI tools will enable more dynamic interactions between designers and facility owners by giving owners an easy way to conceptualize, explore, and even adjust design options.

“One of the big challenges we face in designing science facilities is that we’re not researchers, and the researchers aren’t design engineers,” says Cramm. “We live in very different worlds and we don’t understand each other’s processes. A lot of design engineers will say: ‘I’m just going to ask the scientists what they need.’ The problem is that the scientists don’t always know. So if these generative AI tools can help me quickly understand their scientific processes, then I can give them a better building that makes their research safer and more efficient.”

“I think there’s good potential for using AI in the early ideation process, which can sometimes be a bit of a challenge for architects and designers when there are a lot of technical parameters,” says Jeffrey Zynda, principal science and technology practice leader at Perkins&Will in Boston. “But these new AI engines deal with that fairly rapidly. Being able to correlate different research typologies and rapidly create design options for the specific types of environments and features they need based on immense sets of data is very interesting.”

Risks and Challenges

While many of the fears and misconceptions around AI are unfounded, incorporating these powerful new AI tools in the design process of high-performance labs is not without risks and challenges. One of those challenges is maintaining integrity and establishing accountability.

“One phrase you hear all the time when you’re training as an architect is the concept of responsible control,” says Keith Fine, principal and director of innovation at the S/L/A/M Collaborative (SLAM). “When you stamp a set of drawings with your stamp, you’re taking responsible control of that product as a design professional. So I think that whatever you’re doing with AI—whether it’s in relation to technical details or the quality of the documentation—there has to be a QA/QC component at some point where you maintain responsible control as a professional. Ultimately, these are just tools that you’re responsible for guiding. If you just run something through an AI engine and then put it out, you’re really disrespecting what your stamp stands for.

“That said, you could also take the position that, as a principal or stamping architect, you don’t look at every single piece of verbiage and every arrow on every detail before it goes out,” says Fine. “There’s a component of being in control of your product and firm where you have built-in QC checks and a dedicated staff that you train to do that. So then the question becomes: Can these tools be trained to take more of that role? And is that acceptable within the definition of responsible control? I haven’t talked to a lawyer about that, but it’s certainly an issue AI will be pushing to the fore.”

Also, the more sophisticated these systems become, the harder it is to detect AI-generated content. This can become problematic when it comes to detecting errors and validating results. It also raises ethical questions around content authenticity, copyright issues, and plagiarism.

“As design professionals, we have to be very careful regarding copyright information we’re entrusted with and the types of data we provide,” says Fine. “At this stage, I think AI should be considered more of a brainstorming tool that has to be taken with a grain of salt in order to protect the authenticity of what you’re producing.”

“What worries me a little bit in all of this is that we can’t easily backcheck the system, because it’s pulling from a dataset so large that it can’t be processed by the human mind. So how do we quality check the AI when we can’t keep up with it?” says Zynda.

Another challenge is managing the implicit (and/or explicit) bias embedded in the algorithms and datasets by developers who create them, which inevitably influences their outputs.

“Whoever develops the algorithms and does the data-mining that forms the basis of these engines is obviously sitting in a bias role, either implicitly or explicitly, regarding what the outcomes could be,” says Zynda. “And I think that’s where some of the challenges are going to arise when it comes to using AI tools for highly technical applications.”

Notably, one of the biggest challenges facing organizations is allocating the time, budget, and resources to research and test different AI models. Most of the firms we spoke to had a team or staff member dedicated to figuring out which AI technologies fit into their workflow and how to best utilize them. In contrast, some firms may not have the bandwidth for the early-adopter learning curve, while others are taking a wait-and-see attitude.

“It all sounds like a great idea until you have to spend money and manage the costs of disruption,” says Haines. “That’s something we tend to forget. You have to ask: What’s the tolerance level in your organization for investing, not just the money, but also the time and staffing resources to figure out how to use these technologies in your workflow process?”

Strategies and Applications

While there are numerous ways AI tools can be used to optimize lab design and operations, the applications that are most likely to have an impact in the near term are those that improve energy efficiency and sustainability, and applications that automate or augment iterative elements of the research process itself.

“I think the power of AI will come from being able to look at problems differently using different datasets that we don’t naturally gravitate towards as humans,” says Zynda. “If you look at the evolution of, say, grant teams—20 or 30 years ago, it was usually just a single chemist or biologist pursuing a research grant. Now we have these large multidisciplinary teams that include a biologist, a chemist, a data scientist, an economist, and other specialists. When we extrapolate that into an architectural solution, rapid multi-perspective problem-solving becomes increasingly important due to the complexity of the problems we’re trying to solve. I think that’s where we’re going to see a separation in the industry between firms that are leveraging AI technologies and those that are not.”

“Generative AI tools are another evolution in our business where people will eventually need to get on board if they want to stay relevant,” says Cramm. “I would liken it to the transition from manual drafting to AutoCAD. The industry reached a point where if you weren’t using AutoCAD, you just couldn’t keep up. The same thing happened with Revit.”

“I think generative AI will drive us to start front-loading massive amounts of information during the design process so we can put it through these engines, especially on more technical projects,” says Fine. “And the production process is probably going to involve more of a QA/QC interface. The next several years are going to be very interesting as we combine these new visualization tools with this maldirected metaverse we have. It’s definitely going to be a bit of a mini private space race to get where we’re going next, but I think we just have to get excited about exploring this new frontier and enjoy the ride.”

By Johnathon Allen

Resources:

Generative AI tools are driven by conversational queries and/or prompts that are entered by the user in the form of text, images, videos, designs, and/or musical notes. Machine-learning algorithms respond to these prompts with new content in a wide range of media, including text, images, music, code, voice, and synthetic data—depending on the tool. Here are some of the latest generative AI tools for different applications:

Text: ChatGPT; Bard

Images: DALL-E 2; Midjourney

Music: MuseNet; Amper

Voice: Podcast.ai; Descript

Code: GitHub Copilot; Codex

The Big Three

Developed by OpenAI and released in 2022, the popular ChatGPT model is a generative AI chatbot that provides an interactive, conversational exchange that allows users to ask questions in a narrative format, focus in on relevant details, refine context, provide ongoing feedback, and adjust the length, style, detail level, and language of the output.

Developed by OpenAI, the company behind ChatGPT, DALL-E 2 is an example of “multimodal” text-to-visual AI, which is trained to identify relationships across different media types including text, visuals, and audio. The model, which now serves as the engine for Bing’s Image Creator, uses deep neural networks to generate digital images from language-based prompts, allowing users to create and alter images in different styles.

Based on Alphabet’s proprietary LLM (PaLM 2), Bard is a generative AI model similar to ChatGPT in that its underlying model has hundreds of billions of parameters for processing a wide range of natural structures from languages to proteins. One key distinction between ChatGPT and Bard is that Bard can draw additional information from the internet (though it’s not always accurate), whereas ChatGPT does not (the current version’s dataset ends at September 2021).